Hi all,

A few months ago I started a proof of concept to develop a fast Python library for physical units (because we don't have enough of them) called `fastunits`. This is unrelated to the prototype @seberg presented today at SciPy 2022, which uses dtypes instead.

The current philosophy of `fastunits` is that a `Quantity` wraps a value and a unit. This is what it should look like:

```python
import numpy as np
from fastunits.??? import ??? as u  # TBC

qv1 = [1, 0, 0] << u.m
# Under the hood, this does
# qv1 = ArrayQuantity.from_list([1, 0, 0], u.m)
qv2 = [0, 1, 0] << u.cm
qv3 = np.random.randn(10_000) << u.m

print(qv1 + qv2)
```

As you can see, it's heavily inspired by `astropy.units`, but I want to achieve at least a 10x improvement in performance by sacrificing some backwards compatibility and dropping some of its assumptions.
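To make the `<<` syntax above concrete, here is a toy sketch of how a unit object can turn `value << unit` into a quantity. The `Unit` and `Quantity` classes, the single-dimension unit model and the `to` method are hypothetical illustrations, not the actual `fastunits` API. Plain lists need no special handling because `list` defines no `<<` at all, so Python falls back to `Unit.__rlshift__`. For `ndarray << unit`, however, NumPy's operator is tried first, so the sketch sets `__array_ufunc__ = None` on `Unit` (the NEP 13 opt-out), which makes NumPy return `NotImplemented` and hand control to `Unit.__rlshift__`.

```python
from dataclasses import dataclass

import numpy as np


@dataclass(frozen=True)
class Unit:
    name: str
    dimension: str  # toy model: a single named dimension, e.g. "length"
    scale: float    # factor converting this unit to the SI base unit of its dimension

    # NEP 13: an operand with __array_ufunc__ = None makes ndarray's binary
    # operators return NotImplemented, so `ndarray << unit` falls through to
    # Unit.__rlshift__ instead of becoming an element-wise np.left_shift.
    __array_ufunc__ = None

    def __rlshift__(self, value):
        # `value << unit` attaches this unit to value, converted to a float array.
        return Quantity(np.asarray(value, dtype=float), self)


class Quantity:
    def __init__(self, value, unit):
        self.value = value
        self.unit = unit

    def to(self, unit):
        if unit.dimension != self.unit.dimension:
            raise ValueError(f"cannot convert {self.unit.name} to {unit.name}")
        return Quantity(self.value * (self.unit.scale / unit.scale), unit)

    def __add__(self, other):
        if not isinstance(other, Quantity):
            return NotImplemented
        # Express the right operand in the left operand's unit, then add.
        return Quantity(self.value + other.to(self.unit).value, self.unit)

    def __repr__(self):
        return f"<Quantity {self.value} {self.unit.name}>"


# Hypothetical stand-ins for u.m and u.cm
m = Unit("m", "length", 1.0)
cm = Unit("cm", "length", 0.01)

qv1 = [1, 0, 0] << m
qv2 = [0, 1, 0] << cm
qv3 = np.random.randn(10_000) << m
print(qv1 + qv2)
```

A real implementation would of course track exponents of several base dimensions and cache conversion factors; the point here is only the operator dispatch.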

However, I have two questions on how to make this integrate nicely with the rest of the Scientific Python ecosystem:

- Should I make

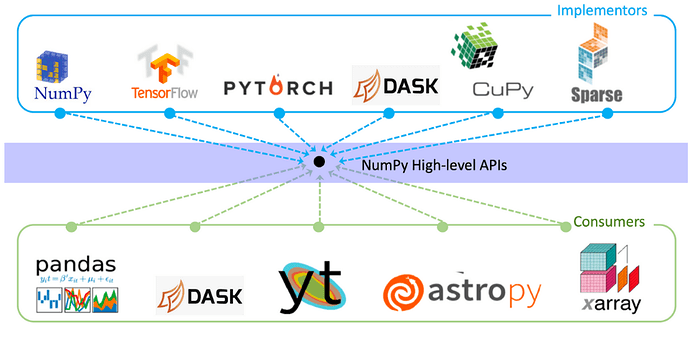

*Quantityobjects wrap any object conforming to the Python array API standard? This supposedly should allow folks to usefastunitsnot only with NumPy arrays but also any of the “implementors” (as described in array-api#1). However, after reading various NEPs and GitHub comments here and there, I’m not at all sure if the image below reflects the current consensus, an up-to-date list of implementors, etc.

- Should `*Quantity` be an Array API implementor? My gut feeling was that anything that is not a purely numerical array is beyond what the spec covers, and @seberg confirmed that intuition to me yesterday. However, what users expect in terms of convenience features is less clear. In other words: should `numpy.add(q1, q2)` return a `fastunits.*Quantity`? The alternative is that users need to wrap/unwrap quantities every time, or do things like

```python
import fastunits.numpy_compat as funp

q = funp.add(q1, q2)
```

which is inconvenient.
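For the first question, a wrapper can stay agnostic of the array library by asking the wrapped array for its namespace through `__array_namespace__`, the entry point defined by the array API standard, and doing all numerical work through that namespace. This is only a sketch: this `Quantity` is hypothetical, units are plain strings, and the NumPy fallback is an assumption added so the example also runs on NumPy versions whose `ndarray` lacks `__array_namespace__` (it was added in NumPy 2.0).

```python
import numpy as np


class Quantity:
    """Hypothetical Quantity wrapping any array that implements __array_namespace__."""

    def __init__(self, value, unit):
        self.value = value  # any array-API-conforming array
        self.unit = unit    # plain string in this sketch

    @property
    def xp(self):
        # Array API standard: the array itself reports which namespace to use.
        get_ns = getattr(self.value, "__array_namespace__", None)
        # Assumption: fall back to NumPy for arrays without the method
        # (e.g. ndarray on NumPy < 2.0).
        return get_ns() if get_ns is not None else np

    def norm(self):
        # Euclidean norm using only functions every conforming namespace provides;
        # the result keeps the same unit.
        xp = self.xp
        return Quantity(xp.sqrt(xp.sum(self.value * self.value)), self.unit)

    def __repr__(self):
        return f"<Quantity {self.value!r} {self.unit}>"


q = Quantity(np.asarray([3.0, 4.0]), "m")
print(q.norm())
```

Note that this `Quantity` does not implement `__array_namespace__` itself: it wraps array API arrays without claiming to be one, which matches the intuition in the second question.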

As far as I understand, this should be done with NEP 18 (`__array_function__`), but the draft of NEP 37 contains several discouraging paragraphs, like this one (emphasis mine):

> `__array_function__` has significant implications for libraries that use it: […] users expect NumPy functions like `np.concatenate` to return NumPy arrays. […] Libraries like Dask and CuPy have looked at and accepted the backwards incompatibility impact of `__array_function__`; it would still have been better for them if that impact didn't exist.

I feel like there are a lot of painful conversations behind these words, but as an outsider I have zero context on what happened, and, more importantly, I'm not sure what the blessed way to proceed is.
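On the `numpy.add` question, one detail may simplify things: `np.add` is a ufunc, and ufuncs dispatch through `__array_ufunc__` (NEP 13), not `__array_function__`; NEP 18's `__array_function__` covers the remaining NumPy functions, such as `np.concatenate`. Below is a minimal sketch of a hypothetical `Quantity` implementing both protocols for a couple of functions, so that `np.add(q1, q2)` and `np.concatenate([q1, q2])` return quantities. The class and the length-only unit table are illustrative assumptions; the `HANDLED_FUNCTIONS` registry pattern follows the example in NumPy's own documentation on writing custom array containers.

```python
import numpy as np

# Toy unit table (assumption): length units only, as factors to metres.
_TO_METRE = {"m": 1.0, "cm": 0.01}

# Registry of NumPy functions this sketch overrides via __array_function__.
HANDLED_FUNCTIONS = {}


def implements(np_function):
    def decorator(func):
        HANDLED_FUNCTIONS[np_function] = func
        return func
    return decorator


class Quantity:
    def __init__(self, value, unit):
        self.value = np.asarray(value, dtype=float)
        self.unit = unit

    def to_value(self, unit):
        return self.value * (_TO_METRE[self.unit] / _TO_METRE[unit])

    # NEP 13: called for ufuncs such as np.add(q1, q2).
    def __array_ufunc__(self, ufunc, method, *inputs, **kwargs):
        if method != "__call__" or ufunc not in (np.add, np.subtract) or kwargs:
            return NotImplemented
        if not all(isinstance(x, Quantity) for x in inputs):
            return NotImplemented
        # Convert every operand to the first operand's unit before computing.
        unit = inputs[0].unit
        return Quantity(ufunc(*(x.to_value(unit) for x in inputs)), unit)

    # NEP 18: called for non-ufunc functions such as np.concatenate.
    def __array_function__(self, func, types, args, kwargs):
        if func not in HANDLED_FUNCTIONS:
            return NotImplemented
        if not all(issubclass(t, Quantity) for t in types):
            return NotImplemented
        return HANDLED_FUNCTIONS[func](*args, **kwargs)


@implements(np.concatenate)
def _concatenate(quantities, axis=0):
    unit = quantities[0].unit
    values = [q.to_value(unit) for q in quantities]
    return Quantity(np.concatenate(values, axis=axis), unit)


q1 = Quantity([1, 0, 0], "m")
q2 = Quantity([0, 1, 0], "cm")
print(np.add(q1, q2).value, np.concatenate([q1, q2]).unit)
```

Returning `NotImplemented` for everything else makes unsupported functions (e.g. `np.mean(q1)` here) raise `TypeError` instead of silently dropping units, which is one way to limit the kind of surprise described in the NEP 37 quote.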

Another reason I'm so hesitant is that I've seen other packages subclass `np.ndarray` for this, but I'm not at all sure that's a good idea.
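To illustrate one pitfall of subclassing: a naive `ndarray` subclass that only stores a unit attribute still lets every ufunc run on the raw numbers, so mismatched units are silently ignored. `NaiveQuantity` below is a deliberately minimal, hypothetical example, not how any existing package does it. Making it correct means also overriding `__array_ufunc__` (and usually `__array_function__`); `astropy.units.Quantity`, for instance, is an `ndarray` subclass that overrides `__array_ufunc__`.

```python
import numpy as np


class NaiveQuantity(np.ndarray):
    """Hypothetical ndarray subclass that just carries a unit string."""

    def __new__(cls, value, unit):
        obj = np.asarray(value, dtype=float).view(cls)
        obj.unit = unit
        return obj

    def __array_finalize__(self, obj):
        # NumPy calls this whenever it creates a new NaiveQuantity
        # (views, slices, ufunc results); inherit the unit if there is one.
        self.unit = getattr(obj, "unit", None)


q1 = NaiveQuantity([1, 0, 0], "m")
q2 = NaiveQuantity([0, 1, 0], "cm")

# Metadata survives slicing...
print(q1[:2].unit)  # m
# ...but the addition ignores units entirely: 1 cm is added as if it were 1 m.
print(np.asarray(q1 + q2))  # [1. 1. 0.]
```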

I tried to be as articulate and succinct as possible. Looking forward to reading your opinions on this!